Docker is a container system that allows us to isolate applications. This last detail is very important, since it distinguishes Docker from other container systems such as OpenVZ, which isolate whole operating system instances.

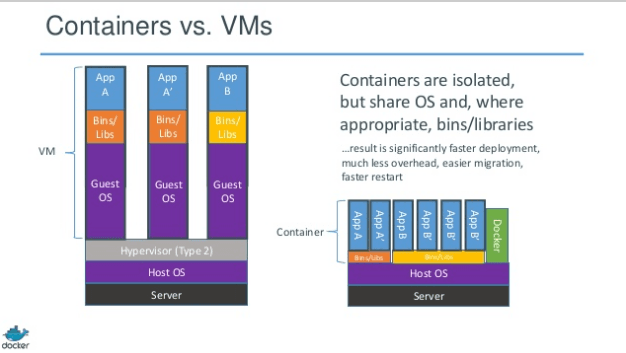

Containers offer a great advantage over full virtualization such as KVM: we obtain a greater density of machines on each computing node. On the other hand, we are limited to running Linux-based operating systems, since all containers share the host's kernel.

Another interesting aspect that differentiates Docker from other container-based systems is that, where possible, the basic libraries of the operating system are also shared, resulting in significant space savings.

The key concept in Docker is that applications are launched, not containers. That is, a container is started in which the app is launched. In a traditional container system, the container is launched and the application is launched inside it.

Having a container started is only the consequence of launching the app. When it finishes its execution, the container is NO longer necessary and is automatically turned off.

Docker relies on several existing technologies:

- Namespaces: Isolation between containers

- Cgroups: Resource control

- UnionFS: Changes are applied in layers. We start from a read-only (RO) base image and changes are added on top. When an image is committed, it becomes a new base layer in RO mode.

- The storage backend for the layers can be AUFS, btrfs, vfs, or DeviceMapper

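This article is composed of the following sections:

- Installation

- Basic usage

- Commits

- Networking

- Volumes

- Cleaning

- Security

- Troubleshooting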

INSTALLATION

The first step is to compile the kernel of our computing node with support for cgroups. If we forget to enable a required kernel option, the Docker ebuild prints a warning at build time naming the option that must be enabled.

We compile Docker with the following USE flags:

app-emulation/docker -aufs btrfs contrib device-mapper doc lxc overlay vim-syntax zsh-completion

We start the service and add it to the default runlevel so that it starts at boot:

rc-update add docker default

NOTE: If we want the containers to have access to the outside without NAT, we must enable forwarding:

net.ipv4.ip_forward = 1

net.ipv4.conf.all.forwarding = 1

BASIC USAGE

Docker has a remote image repository called Docker Hub, which hosts both official and third-party images. They are useful for some initial testing with Docker, but as we will explain later, we will end up using our own image.

We run bash inside the container (-i keeps STDIN open, -t allocates a pseudo-terminal):

exit

We see that the container stopped as soon as we exited the shell, which makes sense since Docker runs applications, NOT containers.

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
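As a minimal sketch of this session (not necessarily the exact commands used here), the interactive run and the follow-up check could look like this. The image name jgkim/gentoo-stage3 is taken from the listings further down, and the function names are hypothetical wrappers used only to keep the example self-contained.

```shell
# Run an interactive bash in a new container.
# -i keeps STDIN open, -t allocates a pseudo-terminal.
run_shell() {
    docker run -t -i "$1" /bin/bash
}

# List running containers; once we "exit" the shell,
# the container no longer appears in this list.
list_running() {
    docker ps
}

# Usage (image name assumed from the later listings):
#   run_shell jgkim/gentoo-stage3    # type "exit" to leave
#   list_running
```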

We leave a task running inside the container:

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8b68c1646f22 jgkim/gentoo-stage3 "/bin/sh -c 'while t 3 seconds ago Up 1 seconds trusting_lovelace
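The COMMAND column in this listing is truncated, so the exact task is not visible. A sketch of what such a background task could look like, assuming a shell loop that prints hello world once per second (the loop body and the interval are assumptions, and the function name is hypothetical):

```shell
# Start a detached (-d) container whose only job is a shell loop.
# The container stays up for as long as the loop keeps running.
start_hello_loop() {
    docker run -d "$1" /bin/sh -c 'while true; do echo hello world; sleep 1; done'
}

# Usage: start_hello_loop jgkim/gentoo-stage3
```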

We can see the standard output of our container:

hello world

hello world

hello world

hello world

We can also see them in tail -f style:
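Both views can be obtained with docker logs. A short sketch, assuming the container name trusting_lovelace from the listing above (the wrapper names are hypothetical):

```shell
# Print everything the container has written to stdout/stderr so far.
show_output() {
    docker logs "$1"
}

# Keep following new output, like tail -f (stop with Ctrl+C).
follow_output() {
    docker logs -f "$1"
}

# Usage:
#   show_output trusting_lovelace
#   follow_output trusting_lovelace
```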

Stop the container:

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
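Stopping is done with docker stop, after which docker ps shows an empty list as above. A sketch, again assuming the name trusting_lovelace (the wrapper name is hypothetical):

```shell
# Ask the container's main process to terminate, then confirm
# that it no longer appears among the running containers.
stop_and_check() {
    docker stop "$1" && docker ps
}

# Usage: stop_and_check trusting_lovelace
```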

We can enter the container and leave it WITHOUT closing the session, screen style:

Ctrl+P, Ctrl+Q

To re-enter:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

25d21650646e jgkim/gentoo-stage3 "/bin/bash" 14 seconds ago Up 12 seconds mad_pare

NOTE: If two sessions are attached, the behavior is very similar to a screen session ;)
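A sketch of the detach and re-attach cycle, assuming the container ID 25d21650646e from the listing above. The wrapper names are hypothetical; docker attach is the standard way to reconnect to a running container's terminal.

```shell
# Start an interactive shell. Pressing Ctrl+P followed by Ctrl+Q
# detaches from it without stopping the container.
start_shell() {
    docker run -t -i "$1" /bin/bash
}

# Re-attach to the still-running container by ID or name.
reattach() {
    docker attach "$1"
}

# Usage:
#   start_shell jgkim/gentoo-stage3
#   reattach 25d21650646e
```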

This is all well and good, but we CANNOT trust a Gentoo image that we have not installed ourselves, so we download the stage3 and import it into docker:

docker images

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

gentoo latest 264d2b032543 6 minutes ago 773.1 MB
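A sketch of the import step, assuming a stage3 tarball has already been downloaded and verified. The file name in the usage line is hypothetical, and depending on the Docker version the archive may need to be decompressed first.

```shell
# Import a root filesystem tarball as a new base image.
# "docker import -" reads the archive from stdin.
import_stage3() {
    tarball=$1    # path to the stage3 archive (hypothetical name below)
    image=$2      # name of the resulting image, "gentoo" in the listing above
    docker import - "$image" < "$tarball"
}

# Usage: import_stage3 ./stage3.tar gentoo
```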

Update the operating system and install basic tools:

emerge --sync

emerge eix htop vim

eix-sync

emerge -uDav world

emerge --depclean

python-updater

exit

COMMITS

Changes in a docker container are very similar to changes in a git repo. When we exit, we must commit the changes or we will lose them:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9a5a0b8c7f13 gentoo "/bin/bash" 6 minutes ago Exited (0) 2 seconds ago grave_colden

Let’s commit the changes so that from now on it serves as a base image:

docker images

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

gentoo/updated latest 4eedf047deb9 47 seconds ago 1.175 GB

gentoo latest 264d2b032543 14 minutes ago 773.1 MB
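A sketch of the commit itself, assuming the container ID 9a5a0b8c7f13 and the image name gentoo/updated from the listings above (the wrapper name is hypothetical):

```shell
# Turn a container's current filesystem into a new image.
commit_container() {
    docker commit "$1" "$2"
}

# Usage: commit_container 9a5a0b8c7f13 gentoo/updated
```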

NOTE: To avoid file corruption, Docker pauses the container while committing. If we want to avoid the pause, we pass the parameter -p=false to docker commit.

At this point, we could package our container and distribute it if we wish:

To import:

docker images

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

gentoo_imported latest b1c4c04c1d2b About a minute ago 1.345 GB

gentoo/updated latest 4eedf047deb9 5 minutes ago 1.175 GB

gentoo latest 264d2b032543 19 minutes ago 773.1 MB

NOTE: There is a difference between save and export. docker save works on an image and keeps all of its layers and history, so you can roll back to any previous state, while docker export works on a container and writes only its last state as a single flat filesystem.
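The two packaging routes can be sketched as follows. Which pair was used for the listing above is not shown, so this is only an illustration; the function and file names are hypothetical.

```shell
# save/load operate on IMAGES and keep layers and history.
save_image() {                 # image -> tar file
    docker save -o "$2" "$1"
}
load_image() {                 # tar file -> image (keeps its original name)
    docker load -i "$1"
}

# export/import operate on a CONTAINER's filesystem and flatten it.
export_container() {           # container -> tar file
    docker export "$1" > "$2"
}
import_flat() {                # tar file -> new single-layer image
    docker import - "$2" < "$1"
}

# Usage:
#   save_image gentoo/updated gentoo_updated.tar
#   load_image gentoo_updated.tar
#   export_container 9a5a0b8c7f13 gentoo_flat.tar
#   import_flat gentoo_flat.tar gentoo_imported
```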

Packaging containers is rarely done since the ideal is to have your own registry server to store images.

Let’s do a rollback test:

rm -rf /etc/*

Oops, we messed up…

No problem, since we haven’t committed, we only lose the changes:

docker run -t -i gentoo/updated /bin/bash

ls -la /etc/passwd

-rw-r--r-- 1 root root 651 Jul 23 04:40 /etc/passwd

We may make a mistake and not even know it. In that case, the only solution is to revert to a commit where we know everything works correctly:

rm -rf /etc/*

exit

docker ps -l

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2500d9c520ea gentoo/updated "/bin/bash" About a minute ago Exited (0) 3 seconds ago ecstatic_colden

docker images

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

gentoo/updated_sinpasswd latest c3b9f653bf61 5 seconds ago 1.175 GB

gentoo_imported latest b1c4c04c1d2b 5 minutes ago 1.345 GB

gentoo/updated latest 4eedf047deb9 9 minutes ago 1.175 GB

gentoo latest 264d2b032543 23 minutes ago 773.1 MB

ls -la /etc/passwd

-rw-r--r-- 1 root root 651 Jul 23 04:40 /etc/passwd

Now let’s imagine that we have made several really laborious configurations in addition to the error. We are not going to discard all our work just because we made a mistake. The steps to follow would be:

- Commit the changes

- Enter a previous commit (it may be more convenient to use volumes)

- Copy the deleted files

- Exit

- Enter the latest commit

- Restore the deleted files

- Exit

- Commit the changes
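The steps above can be sketched with a shared volume, as the list suggests. This is only one possible way to do it: the function name, the /rescue mount point, and the image names in the usage line are hypothetical, and the broken state is assumed to have been committed already (the first step of the list).

```shell
# Copy one file from a known-good image into a broken one through a
# host directory mounted as a volume, then commit the repaired state.
restore_file() {
    good=$1; broken=$2; path=$3; fixed=$4
    rescue=$(mktemp -d) || return 1

    # Enter the previous commit and copy the deleted file out.
    docker run --rm -v "$rescue":/rescue "$good" \
        cp -a "$path" /rescue/ || return 1

    # Enter the latest commit and restore the file.
    docker run -v "$rescue":/rescue "$broken" \
        cp -a "/rescue/$(basename "$path")" "$path" || return 1

    # Commit the container created by the previous step (the most recent one).
    docker commit "$(docker ps -l -q)" "$fixed"
}

# Usage:
#   restore_file gentoo/updated gentoo/updated_sinpasswd /etc/passwd gentoo/fixed
```

Using a volume keeps both containers non-interactive; the same result can be reached by hand following the list step by step.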

A very useful command is docker diff, which shows the changes made to a container's filesystem relative to its image, that is, between our last session in the container and the last commit:

rm /etc/passwd

exit

The last session:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f269a364a153 gentoo/updated "/bin/bash" 22 seconds ago Exited (0) 9 seconds ago evil_hawking

We compare the state of the last session (22 seconds ago) with the state of the last commit of the image gentoo/updated:

C /root

C /root/.bash_history

C /etc

D /etc/passwd

We can see that /root and the bash history have changed (C) and that /etc/passwd has been deleted (D).
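A sketch of how this output can be produced and filtered, assuming the container ID f269a364a153 from the listing above. The helper names are hypothetical, and the filter assumes paths without spaces.

```shell
# Show every change in a container relative to its image.
# A = added, C = changed, D = deleted.
show_changes() {
    docker diff "$1"
}

# Print only the deleted paths, handy for spotting accidental removals.
list_deleted() {
    docker diff "$1" | awk '$1 == "D" { print $2 }'
}

# Usage:
#   show_changes f269a364a153
#   list_deleted f269a364a153
```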

NETWORKING

Docker uses iptables to redirect traffic from the host machine to the container. To test this, we install Apache:

emerge apache

cd /etc/apache2/vhosts.d/

rm *

vi 00_kr0m.conf

Listen 80

NameVirtualHost *:80

<VirtualHost *:80>

ServerAdmin kr0m@alfaexploit.com

DocumentRoot /var/www/html

ServerName *

ErrorLog /var/log/apache2/docker_http.error_log

CustomLog /var/log/apache2/docker_http.access_log combined

DirectoryIndex index.php index.htm index.html

<Directory "/var/www/html">

Options -Indexes FollowSymLinks

AllowOverride All

Order allow,deny

Allow from all

</Directory>

</VirtualHost>

vi /var/www/html/index.html

<html><body><h1>DockerWeb by kr0m</h1></body></html>

ServerName localhost

Commit the changes:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1049a59f7816 gentoo/updated "/bin/bash" 3 hours ago Exited (0) 5 seconds ago trusting_perlman

We start the container, this time indicating that port 7777 on the host (WAN side) will be redirected to port 80 in the container (LAN side).

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

18d6cd9c9521 gentoo/apache "/usr/sbin/apache2 - 7 seconds ago Up 4 seconds 0.0.0.0:7777->80/tcp dreamy_lalande
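A sketch of the commit and the run with port publishing, assuming the container ID 1049a59f7816 and the image name gentoo/apache from the listings. The Apache arguments are truncated in the COMMAND column, so the ones in the usage line are an assumption, and the function names are hypothetical.

```shell
# Commit the configured container as an image.
commit_as() {
    docker commit "$1" "$2"
}

# Run an image detached, publishing HOST_PORT on the host to
# CONTAINER_PORT inside the container. Remaining arguments are the command.
run_published() {
    host_port=$1; container_port=$2; image=$3
    shift 3
    docker run -d -p "$host_port:$container_port" "$image" "$@"
}

# Usage (the arguments after apache2 are an assumption):
#   commit_as 1049a59f7816 gentoo/apache
#   run_published 7777 80 gentoo/apache /usr/sbin/apache2 -D FOREGROUND
```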

We check that the redirection works correctly:

<html><body><h1>DockerWeb by kr0m</h1></body></html>
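One way to run this check is with curl against the published port on the host. This is a sketch; the helper name is hypothetical and localhost is assumed to be the Docker host.

```shell
# Fetch the index page through the published host port.
check_web() {
    curl -s "http://$1:$2/"
}

# Usage: check_web localhost 7777
```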

NOTE: In some pre-installed Apache containers, we see that a script is launched directly:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

169027bdabbe fedora/apache "/run-apache.sh" 17 seconds ago Up 15 seconds 80/tcp fedoreando

cat run-apache.sh

#!/bin/bash

rm -rf /run/httpd/* /tmp/httpd*

exec /usr/sbin/httpd -D FOREGROUND

We can see the redirection of port 7777 to the container’s IP through iptables:

Chain DOCKER (2 references)

target prot opt source destination

DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:7777 to:172.17.0.18:80
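A listing like this one comes from the nat table, where Docker keeps its DOCKER chain. A sketch of the query (the helper name is hypothetical; it must be run as root):

```shell
# List the DNAT rules Docker created for published ports.
# -t nat selects the NAT table, -n prints numeric addresses and ports.
show_docker_nat() {
    iptables -t nat -L DOCKER -n
}

# Usage: show_docker_nat
```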

The internal network created by Docker can be seen through ifconfig:

eth0: flags=67<UP,BROADCAST,RUNNING> mtu 1500

inet 172.17.0.7 netmask 255.255.0.0 broadcast 0.0.0.0

ether ae:76:9a:64:c5:05 txqueuelen 0 (Ethernet)

veth1e84f17: flags=67<UP,BROADCAST,RUNNING> mtu 1500

inet6 fe80::ac76:9aff:fe64:c505 prefixlen 64 scopeid 0x20<link>

ether ae:76:9a:64:c5:05 txqueuelen 1000 (Ethernet)

We can see the bridge created, and all containers that are on the same bridge will be accessible to each other:

bridge name bridge id STP enabled interfaces

docker0 8000.ae769a64c505 no veth1e84f17

NOTE: As we can see, the bridge does not add the eth0 interface but veth1e84f17, whose MAC is identical to that of eth0.

Docker can use another mode of interconnection between containers called “linking system”, which is based on the name of the containers to establish data flows.

This system has a great advantage: NO port is exposed to the outside, since the data passes through the tunnel created by Docker.

Connectivity is achieved by:

- Environment variables

- Modifying the /etc/hosts

Stop and delete the previous container:

docker rm 18d6cd9c9521

Create the database container:

emerge dev-db/mysql

emerge --config =dev-db/mysql-5.6.24

vi /etc/mysql/my.cnf

bind-address = 0.0.0.0

mysql -uroot -p'XXXX'

use mysql

grant all privileges on *.* to root@'172.17.0.%' identified by 'XXXX';

flush privileges;

exit

docker ps -l

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5be78a416521 gentoo/updated "/bin/bash" 13 minutes ago Exited (0) 2 seconds ago high_newton

Commit the changes:

Start the container, giving it the name MySQL:

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

aae0431579ec gentoo/mysql "/usr/bin/mysqld_saf 6 seconds ago Up 4 seconds MySQL
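A sketch of the commit and the named start, assuming the container ID 5be78a416521, the image gentoo/mysql, and the name MySQL from the listings. The server command is truncated in the COMMAND column, so it is left as a parameter here; the function names are hypothetical.

```shell
# Commit the configured container as the database image.
commit_as() {
    docker commit "$1" "$2"
}

# Start a detached container with a fixed name, so that other
# containers can later refer to it by that name.
run_named() {
    name=$1; image=$2
    shift 2
    docker run -d --name "$name" "$image" "$@"
}

# Usage:
#   commit_as 5be78a416521 gentoo/mysql
#   run_named MySQL gentoo/mysql <server start command>
```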

Allow traffic between containers:

Start the Apache container linking it with MySQL:

cat /etc/hosts

172.17.0.18 mydb aae0431579ec MySQL

PING mydb (172.17.0.18) 56(84) bytes of data.

64 bytes from mydb (172.17.0.18): icmp_seq=1 ttl=64 time=0.078 ms

64 bytes from mydb (172.17.0.18): icmp_seq=2 ttl=64 time=0.026 ms

NOTE: The linking option has the form --link CONTAINER_NAME:ALIAS, in our case --link MySQL:mydb

This way we can refer to the database as mydb within the container.
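A sketch of starting the linked container, assuming the gentoo/apache image and the MySQL container name used above (the wrapper name is hypothetical):

```shell
# Start an interactive container linked to another one.
# Inside it, ALIAS resolves to the linked container through /etc/hosts.
run_linked() {
    target=$1; alias_name=$2; image=$3
    docker run -t -i --link "$target:$alias_name" "$image" /bin/bash
}

# Usage:
#   run_linked MySQL mydb gentoo/apache
#   # then, inside the container:  ping mydb
```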

We install the mysql client to make a test connection:

dev-db/mysql -bindist -cluster -community -debug -embedded -extraengine -jemalloc -latin1 -max-idx-128 minimal -perl -profiling -selinux -ssl -static -static-libs -systemtap -tcmalloc -test

virtual/mysql -embedded minimal -static -static-libs

mysql -h mydb -uroot -p'XXXX'

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 1

Server version: 5.6.24-log Source distribution

NOTE: If a container is restarted, it will be assigned a new IP address. In this case, the hosts file will be automatically updated in all containers linked to it.

VOLUMES

Volumes are directories (or single files) that can be shared and reused between containers. A basic example could be the following:

echo alfaexploit.com > /webapp/asd

docker run -t -i --name Apache -v /webapp:/webapp gentoo/apache /bin/bash

cat /webapp/asd

alfaexploit.com

NOTE: This way, several servers can access the same information in real-time.

A good trick is to put all logs in the same directory with subdirectories per container:

-v /var/log/container00:/var/log

A volume can also be mounted read-only (RO):

-v /src/webapp:/opt/webapp:ro

Or we can even mount individual files:

-v ~/.bash_history:/.bash_history

It is possible to copy files from a container without having to enter it:

cat run-apache.sh
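Copying is done with docker cp. A sketch, assuming the fedoreando container from the earlier listing and that run-apache.sh sits at the root of its filesystem, as its COMMAND column suggests (the wrapper name is hypothetical):

```shell
# Copy a file out of a container to a host directory
# without attaching to the container.
copy_from_container() {
    container=$1; src=$2; dest=$3
    docker cp "$container:$src" "$dest"
}

# Usage: copy_from_container fedoreando /run-apache.sh .
```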

Volumes can also be interesting if we want to avoid using technologies such as NFS.

CLEANING

Delete all containers that have exited:

Delete the old ones:
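A sketch of both clean-ups. What counts as old is not specified here, so the second helper assumes it means containers whose CREATED column reads some number of weeks ago; both function names are hypothetical.

```shell
# Remove every container whose status is "exited".
remove_exited() {
    ids=$(docker ps -a -q -f status=exited)
    # $ids is deliberately unquoted so each ID becomes its own argument.
    if [ -n "$ids" ]; then docker rm $ids; fi
}

# Remove containers created weeks ago (assumed meaning of "old").
# Running containers are refused by docker rm unless -f is added.
remove_old() {
    ids=$(docker ps -a | awk '/weeks ago/ { print $1 }')
    if [ -n "$ids" ]; then docker rm $ids; fi
}

# Usage:
#   remove_exited
#   remove_old
```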

SECURITY

Some basic security recommendations are:

- Configure minimum capabilities: Allow each container access only to the system resources it needs

- GRsec: Security patches at the kernel level

- Auditd: Syscall auditing

- Docker-KVM: The guest system is only booted once, and all containers run within this KVM

- AppArmor: Profiles of allowed actions

- Use your own base image and registry server
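As an illustration of the first recommendation, capabilities can be restricted per container with --cap-drop and --cap-add. This is a sketch: the function name is hypothetical, and NET_BIND_SERVICE is only an example. The capabilities a given service really needs must be checked per application.

```shell
# Start a container with all capabilities dropped except the one
# needed to bind ports below 1024.
run_min_caps() {
    image=$1
    shift
    docker run -d --cap-drop=ALL --cap-add=NET_BIND_SERVICE "$image" "$@"
}

# Usage: run_min_caps my/image <service command>
```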

TROUBLESHOOTING

- https://github.com/wsargent/docker-cheat-sheet

- dmesg

- brctl show

- iptables -L -n

- tail -f /var/log/docker.log

- docker top CONTAINERID

- docker stats CONTAINERID

- docker history IMAGE_NAME

- docker stats $(docker ps -q)

- Enable DEBUG:

  - vi /etc/conf.d/docker

  - DOCKER_OPTS="--log-level=debug"

  - /etc/init.d/docker restart