Having redundancy for the OS is almost as important as having redundancy for the data itself. A failed OS disk can mean considerable downtime, depending on the services running on it. In this article, we will see how to convert our zroot pool into a mirror, so that the OS data is duplicated on as many disks as we want.

Before continuing, it is advisable to review the previous article so that all the concepts are clear.

To convert a bootable pool into a mirror, adding the disks to the vdev is not enough. You also have to replicate the partition scheme of the original disk, because every disk must carry its own copy of the bootloader.

In this case, we are going to convert the zroot pool, which currently consists of a single partition, into a mirror:

```
  pool: zroot
 state: ONLINE
  scan: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        zroot       ONLINE       0     0     0
          vtbd0p4   ONLINE       0     0     0

errors: No known data errors
```

We check the available disks (it is a CBSD VM with virtio-blk disks):

```
crw-r-----  1 root  operator  0x61 Nov 13 22:51 /dev/vtbd0
crw-r-----  1 root  operator  0x63 Nov 13 22:51 /dev/vtbd0p1
crw-r-----  1 root  operator  0x64 Nov 13 22:51 /dev/vtbd0p2
crw-r-----  1 root  operator  0x65 Nov 13 23:51 /dev/vtbd0p3
crw-r-----  1 root  operator  0x66 Nov 13 22:51 /dev/vtbd0p4
crw-r-----  1 root  operator  0x62 Nov 13 22:51 /dev/vtbd1
```

We replicate the partition scheme on the second disk, which must be at least as large as the first one:
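The command for this step is not shown. A minimal sketch, assuming FreeBSD's gpart(8) and the disk names of this VM: `gpart backup` prints the source disk's partition table and `gpart restore -F` recreates it on the target, destroying any table already there. The function name `clone_gpt` is hypothetical.

```sh
#!/bin/sh
# clone_gpt SRC DST  (hypothetical helper, run as root on FreeBSD)
# Copies the GPT layout of SRC onto DST. DST must be at least as large
# as SRC; any partition table already on DST is destroyed by -F.
clone_gpt() {
    src=$1
    dst=$2
    # "gpart backup" dumps the table in text form; "gpart restore -F"
    # reads it from stdin and recreates the same partitions on DST.
    gpart backup "$src" | gpart restore -F "$dst"
}

# Usage for this VM: clone_gpt vtbd0 vtbd1
```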

We check that both disks have the same scheme:

```
=>        40  20971696  vtbd0  GPT  (10G)
          40    409600      1  efi  (200M)
      409640      1024      2  freebsd-boot  (512K)
      410664       984         - free -  (492K)
      411648   4194304      3  freebsd-swap  (2.0G)
     4605952  16365568      4  freebsd-zfs  (7.8G)
    20971520       216         - free -  (108K)

=>        40  20971696  vtbd1  GPT  (10G)
          40    409600      1  efi  (200M)
      409640      1024      2  freebsd-boot  (512K)
      410664       984         - free -  (492K)
      411648   4194304      3  freebsd-swap  (2.0G)
     4605952  16365568      4  freebsd-zfs  (7.8G)
    20971520       216         - free -  (108K)
```
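The listing above is presumably the output of `gpart show vtbd0 vtbd1`. To make the comparison mechanical rather than visual, a hypothetical helper can replace the disk name on the header line and compare the two tables. This only fits the same-size case shown here: with a larger second disk, the header and the trailing free space would legitimately differ.

```sh
#!/bin/sh
# same_layout DISK_A DISK_B  (hypothetical helper)
# Succeeds if both disks have identical "gpart show" tables once the
# disk name on the header line is normalised to "DISK".
same_layout() {
    a=$(gpart show "$1" | sed "s/$1/DISK/")
    b=$(gpart show "$2" | sed "s/$2/DISK/")
    [ "$a" = "$b" ]
}

# Usage: same_layout vtbd0 vtbd1 && echo "layouts match"
```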

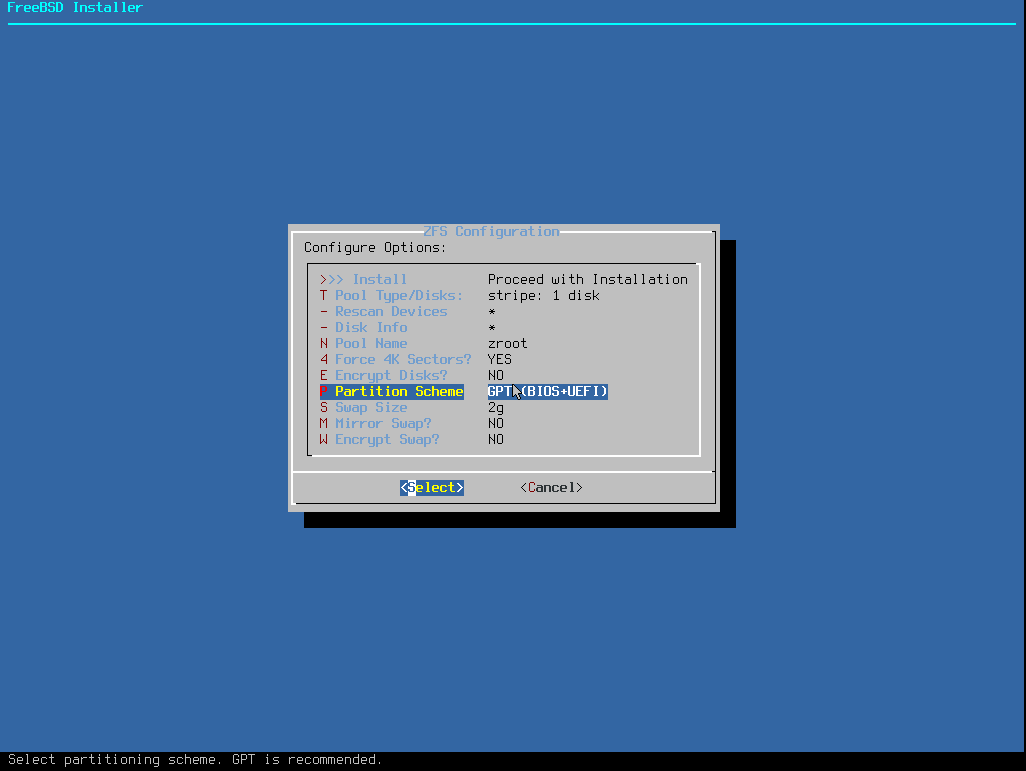

Both the EFI partition and the freebsd-boot partition used for BIOS booting are present. This is because the OS was installed with the GPT (BIOS+UEFI) scheme, the most compatible option, since the disk can then be booted by either type of firmware.

We add the disk to the zroot pool:
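The command that produced the message below is not shown. Presumably it was `zpool attach`, which, when the existing device is a plain disk vdev, turns it into a two-way mirror with the new device and starts resilvering. The wrapper name `make_mirror` is hypothetical.

```sh
#!/bin/sh
# make_mirror POOL EXISTING NEW  (hypothetical helper)
# Attaches NEW as a mirror of EXISTING. If EXISTING is not yet mirrored,
# ZFS converts it into mirror-0 and starts resilvering NEW.
make_mirror() {
    pool=$1
    existing=$2
    new=$3
    zpool attach "$pool" "$existing" "$new" || return 1
    # Show the resulting layout; resilver progress appears under "scan:".
    zpool status "$pool"
}

# Usage for this VM: make_mirror zroot vtbd0p4 vtbd1p4
```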

```
Make sure to wait until resilver is done before rebooting.

If you boot from pool 'zroot', you may need to update
boot code on newly attached disk 'vtbd1p4'.

Assuming you use GPT partitioning and 'da0' is your new boot disk
you may use the following command:

        gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 da0
```

When a new disk is attached, the existing data must be copied onto it. This process is called resilvering, and its progress can be followed in the output of the zpool status command:

```
  pool: zroot
 state: ONLINE
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Sat Nov 14 00:02:32 2020
        823M scanned at 51.5M/s, 803M issued at 50.2M/s, 824M total
        794M resilvered, 97.43% done, 0 days 00:00:00 to go
config:

        NAME         STATE     READ WRITE CKSUM
        zroot        ONLINE       0     0     0
          mirror-0   ONLINE       0     0     0
            vtbd0p4  ONLINE       0     0     0
            vtbd1p4  ONLINE       0     0     0

errors: No known data errors
```

When the resilver finishes, the scan line reports it as resilvered:

```
  pool: zroot
 state: ONLINE
  scan: resilvered 823M in 0 days 00:00:15 with 0 errors on Fri Nov 13 23:03:12 2020
config:

        NAME         STATE     READ WRITE CKSUM
        zroot        ONLINE       0     0     0
          mirror-0   ONLINE       0     0     0
            vtbd0p4  ONLINE       0     0     0
            vtbd1p4  ONLINE       0     0     0

errors: No known data errors
```
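Instead of re-running zpool status by hand until the resilver is done, a small polling loop can wait for it before rebooting. This sketch simply looks for the text "resilver in progress" in the status output shown above; newer OpenZFS releases also offer `zpool wait -t resilver`, which may not exist on older FreeBSD versions. The function name and the default 10-second interval are arbitrary, hypothetical choices.

```sh
#!/bin/sh
# wait_resilver POOL [INTERVAL]  (hypothetical helper)
# Polls "zpool status" until it no longer reports a resilver in progress,
# then prints the final scan line.
wait_resilver() {
    pool=$1
    interval=${2:-10}
    while zpool status "$pool" | grep -q "resilver in progress"; do
        sleep "$interval"
    done
    # e.g. "  scan: resilvered 823M in 0 days 00:00:15 with 0 errors ..."
    zpool status "$pool" | grep "scan:"
}

# Usage: wait_resilver zroot && echo "safe to reboot"
```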

We now copy the boot code to the EFI and freebsd-boot partitions of vtbd1, so the system can start regardless of which disk it boots from.

As a reminder, these are the first partitions of the scheme:

```
=>        40  20971696  vtbd1  GPT  (10G)
          40    409600      1  efi  (200M)
      409640      1024      2  freebsd-boot  (512K)
```

Which partition receives the boot code, and which files are written to it, depends on the boot method. Since the OS was installed in GPT (BIOS+UEFI) mode, there are two boot partitions, efi (index 1) and freebsd-boot (index 2), and the -i parameter of gpart bootcode selects the one to write. Note that the command suggested by zpool attach uses -i 1, which in this layout is the EFI partition, so it should not be copied verbatim here.

UEFI:
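The command is not shown here, but the replacement step later in the article uses the same form on vtbd0, so for vtbd1 it is presumably the following: `-p` writes the FAT image /boot/boot1.efifat into the partition selected by `-i`, index 1 (efi) in this layout. On newer FreeBSD releases this image may no longer be installed; there the usual approach is to copy the EFI loader onto a FAT-formatted ESP instead. The function name is hypothetical.

```sh
#!/bin/sh
# install_efi_boot DISK [INDEX]  (hypothetical helper, FreeBSD)
# Writes /boot/boot1.efifat into partition INDEX of DISK
# (index 1 is the efi partition in the layout above).
install_efi_boot() {
    disk=$1
    index=${2:-1}
    gpart bootcode -p /boot/boot1.efifat -i "$index" "$disk"
}

# Usage for this VM: install_efi_boot vtbd1
```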

```
partcode written to vtbd1p1
```

BIOS: in addition to writing the boot code to the freebsd-boot partition, this method also writes the protective MBR to the start of the disk.
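Again the command is missing; given the output below, it was presumably gpart bootcode with both `-b` (protective MBR /boot/pmbr, written to the start of the disk) and `-p` (/boot/gptzfsboot, written to the partition given by `-i`, here index 2, freebsd-boot). The function name is hypothetical.

```sh
#!/bin/sh
# install_bios_boot DISK [INDEX]  (hypothetical helper, FreeBSD)
# -b writes /boot/pmbr to the disk's first sector; -p writes
# /boot/gptzfsboot into partition INDEX (freebsd-boot is index 2 here).
install_bios_boot() {
    disk=$1
    index=${2:-2}
    gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i "$index" "$disk"
}

# Usage for this VM: install_bios_boot vtbd1
```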

```
partcode written to vtbd1p2
bootcode written to vtbd1
```

If we disconnect the vtbd0 disk, the system still boots without problems, but the pool is reported as degraded:

```
  pool: zroot
 state: DEGRADED
status: One or more devices could not be used because the label is missing or
        invalid. Sufficient replicas exist for the pool to continue
        functioning in a degraded state.
action: Replace the device using 'zpool replace'.
   see: http://illumos.org/msg/ZFS-8000-4J
  scan: resilvered 823M in 0 days 00:00:16 with 0 errors on Sat Nov 14 00:02:48 2020
config:

        NAME                     STATE     READ WRITE CKSUM
        zroot                    DEGRADED     0     0     0
          mirror-0               DEGRADED     0     0     0
            4177867918075153002  FAULTED      0     0     0  was /dev/vtbd0p4
            vtbd0p4              ONLINE       0     0     0

errors: No known data errors
```

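The surviving disk now appears as vtbd0p4, presumably because, with the original vtbd0 removed, the remaining virtio disk was enumerated as vtbd0. At this point we can either remove the failed disk from the pool or replace it; let’s look at both cases.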

Remove it:
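The command for this step is not shown. Presumably it was `zpool detach`, which removes a device from a mirror; the missing disk is referred to by the numeric GUID that zpool status prints in place of its name. Once only one member is left, the vdev is no longer a mirror, which is why mirror-0 disappears from the output below. The function name is hypothetical.

```sh
#!/bin/sh
# drop_missing POOL GUID  (hypothetical helper)
# Detaches a faulted or missing mirror member identified by the GUID
# shown in "zpool status" (e.g. 4177867918075153002 above).
drop_missing() {
    zpool detach "$1" "$2" || return 1
    zpool status "$1"
}

# Usage for this VM: drop_missing zroot 4177867918075153002
```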

```
root@Melocotonazo:~ # zpool status
  pool: zroot
 state: ONLINE
  scan: resilvered 823M in 0 days 00:00:16 with 0 errors on Sat Nov 14 00:02:48 2020
config:

        NAME        STATE     READ WRITE CKSUM
        zroot       ONLINE       0     0     0
          vtbd0p4   ONLINE       0     0     0

errors: No known data errors
```

Replace it:

```
  pool: zroot
 state: DEGRADED
status: One or more devices could not be opened. Sufficient replicas exist for
        the pool to continue functioning in a degraded state.
action: Attach the missing device and online it using 'zpool online'.
   see: http://illumos.org/msg/ZFS-8000-2Q
  scan: resilvered 823M in 0 days 00:00:14 with 0 errors on Sat Nov 14 11:52:53 2020
config:

        NAME                      STATE     READ WRITE CKSUM
        zroot                     DEGRADED     0     0     0
          mirror-0                DEGRADED     0     0     0
            17957430762927248855  UNAVAIL      0     0     0  was /dev/vtbd0p4
            vtbd1p4               ONLINE       0     0     0

errors: No known data errors
```

We prepare the replacement disk:

```
root@Melocotonazo:~ # gpart bootcode -p /boot/boot1.efifat -i 1 vtbd0
partcode written to vtbd0p1
```
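The session above only shows the EFI boot code being written. Since vtbd0p1 already exists at that point, the partition table must have been copied first, and for BIOS booting the freebsd-boot partition needs its own boot code too. A sketch of the complete preparation, assuming vtbd1 is the healthy disk and vtbd0 the blank replacement (the function name is hypothetical):

```sh
#!/bin/sh
# prepare_replacement GOOD NEW  (hypothetical helper, FreeBSD, run as root)
# Gives NEW the same GPT layout and boot code as GOOD so it can
# replace a failed member of the boot pool.
prepare_replacement() {
    good=$1
    new=$2
    # 1. Copy the partition table (destroys any table already on NEW).
    gpart backup "$good" | gpart restore -F "$new" || return 1
    # 2. UEFI: FAT image with the EFI loader into partition 1 (efi).
    gpart bootcode -p /boot/boot1.efifat -i 1 "$new" || return 1
    # 3. BIOS: protective MBR plus gptzfsboot into partition 2 (freebsd-boot).
    gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 2 "$new"
}

# Usage for this VM: prepare_replacement vtbd1 vtbd0
```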

We perform the replacement:
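The command is again not shown. Presumably it was `zpool replace`, naming the unavailable device by its GUID from the status output above and the new partition by its device name; ZFS then resilvers the new partition from the remaining healthy member. The function name is hypothetical.

```sh
#!/bin/sh
# replace_missing POOL GUID NEWDEV  (hypothetical helper)
# Replaces the unavailable mirror member GUID with NEWDEV and shows
# the pool status, where the resilver progress can be followed.
replace_missing() {
    zpool replace "$1" "$2" "$3" || return 1
    zpool status "$1"
}

# Usage for this VM: replace_missing zroot 17957430762927248855 vtbd0p4
```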

```
Make sure to wait until resilver is done before rebooting.

If you boot from pool 'zroot', you may need to update
boot code on newly attached disk 'vtbd0p4'.

Assuming you use GPT partitioning and 'da0' is your new boot disk
you may use the following command:

        gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 da0
```

zpool status shows that the resilver onto the new disk is in progress:

```
  pool: zroot
 state: DEGRADED
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Sat Nov 14 11:59:42 2020
        823M scanned at 103M/s, 460M issued at 57.4M/s, 824M total
        455M resilvered, 55.80% done, 0 days 00:00:06 to go
config:

        NAME                        STATE     READ WRITE CKSUM
        zroot                       DEGRADED     0     0     0
          mirror-0                  DEGRADED     0     0     0
            replacing-0             UNAVAIL      0     0     0
              17957430762927248855  UNAVAIL      0     0     0  was /dev/vtbd0p4/old
              vtbd0p4               ONLINE       0     0     0
            vtbd1p4                 ONLINE       0     0     0

errors: No known data errors
```

When it finishes, the status will appear as resilvered and we can all sleep peacefully again:

```
  pool: zroot
 state: ONLINE
  scan: resilvered 823M in 0 days 00:00:15 with 0 errors on Sat Nov 14 11:59:57 2020
config:

        NAME         STATE     READ WRITE CKSUM
        zroot        ONLINE       0     0     0
          mirror-0   ONLINE       0     0     0
            vtbd0p4  ONLINE       0     0     0
            vtbd1p4  ONLINE       0     0     0

errors: No known data errors
```